Context in LLMs and the blockchain

Large language models confront superficially similar challenges to those encountered by blockchains, but big differences emerge the closer you look.

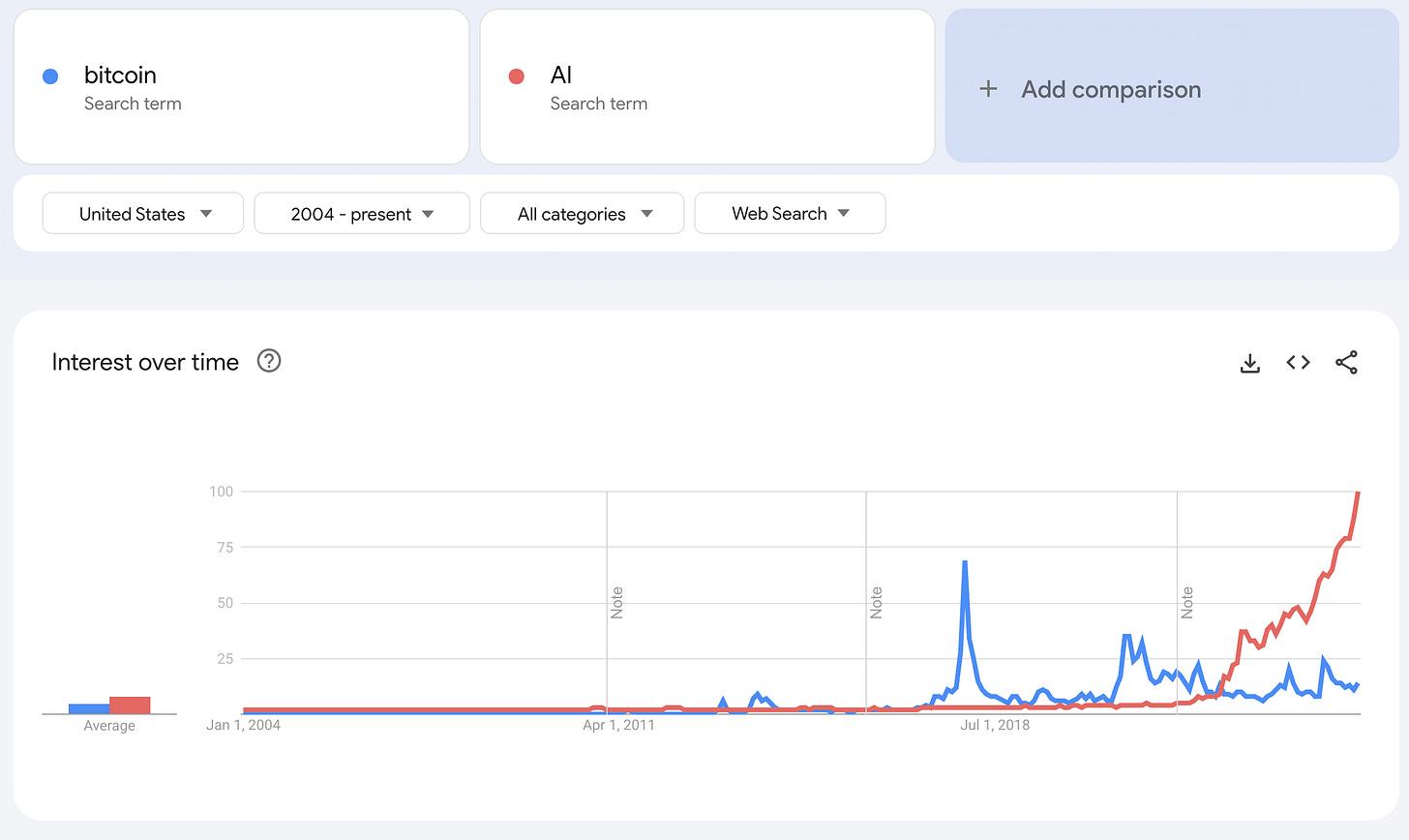

Artificial intelligence and blockchains represent two of the hottest technology trends of the past decade. “Bitcoin” vs. “AI” is not exactly an apples-to-apples comparison,¹ but you can nevertheless spot the relative interest in the two terms over time using Google Trends:

It’s hard to remember now just how over-the-top blockchain hype once was, but here’s one anecdote: at the end of 2017, the Long Island Iced Tea company rebranded to “Long Blockchain Corp,” and its stock went up 200%.

We’re at a similar level of euphoria with AI right now (if not even higher, as evidenced by the chart above). But outside of NVIDIA, which has hit paydirt with both cycles, there is very little the two technologies have in common. Nevertheless, there is one architectural similarity that I’ve been thinking about recently.

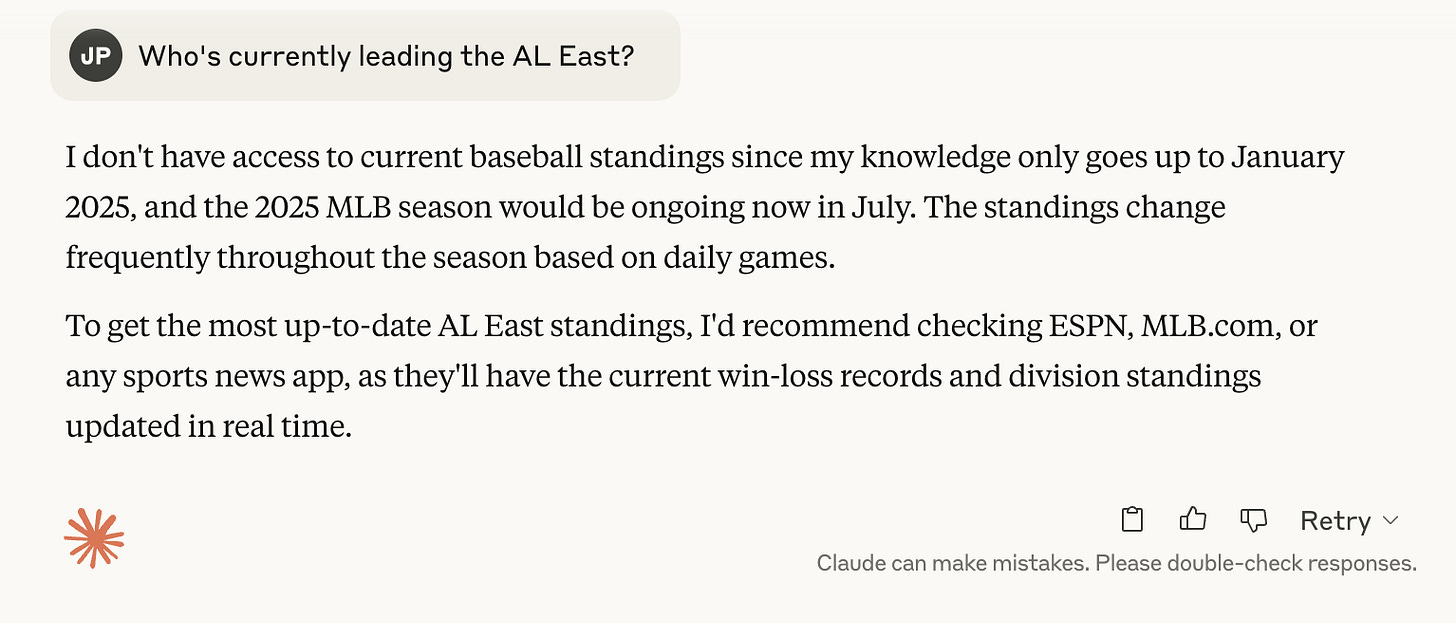

Large language models (LLMs) like ChatGPT, Claude, and Gemini come with an expiration date baked in. When Anthropic’s Claude (with web search turned off) tells you “my knowledge only goes up to January 2025,” it’s highlighting a core limitation: LLMs are created by training on enormous text datasets. This “pre-training” process is extremely costly, GPU-intensive, and time-consuming, which is a big part of why many models are only updated every few months or so.

In a sense, the moment a model goes live, it’s already out of date: world events continue to transpire, sports teams rack up wins and losses, businesses go bankrupt, and so on, and yet the model is frozen in time — aware only of whatever has been written leading up to its creation.

So there’s a weird dichotomy where a standalone LLM can write cogently on some of the most complex and difficult issues humans encounter — as long as it’s a historical or evergreen topic — and yet be totally unable to answer a simple question about which soccer team was crowned champion in the most recent Premier League season.

Echoes of the blockchain

Ugh, I know. I’ve written enough about crypto, right? But hear me out.

Blockchains face a superficially similar challenge to LLMs. A blockchain can tell you with mathematical certainty that a piece of information hasn't been tampered with once it’s been added to the ledger. In this way it solves the "double-spending problem" by maintaining an immutable record of transactions. But it tells you absolutely nothing about whether the original data was accurate to begin with.

If someone writes "The sky is green" to a blockchain, the blockchain will faithfully preserve that statement forever. It can prove that nobody changed it to "The sky is blue" after the fact. But on its own, it can't tell you whether the sky is actually green or blue.
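To make the distinction concrete, here is a minimal sketch of a hash-linked ledger in Python. It is a toy under stated assumptions, not how any real blockchain is implemented: there is no network, consensus, or proof-of-work, and the names `append_block` and `is_untampered` are made up for illustration. The only point is that the integrity check looks at hashes, never at truth.

```python
import hashlib
import json


def block_hash(index: int, data: str, prev_hash: str) -> str:
    """Hash a block's contents together with the previous block's hash."""
    payload = json.dumps({"index": index, "data": data, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_block(chain: list[dict], data: str) -> None:
    """Append a block whose hash commits to everything that came before it."""
    prev_hash = chain[-1]["hash"] if chain else "0" * 64
    index = len(chain)
    chain.append({
        "index": index,
        "data": data,
        "prev": prev_hash,
        "hash": block_hash(index, data, prev_hash),
    })


def is_untampered(chain: list[dict]) -> bool:
    """Check integrity only: recompute every hash and verify every link."""
    prev_hash = "0" * 64
    for block in chain:
        if block["prev"] != prev_hash:
            return False
        if block["hash"] != block_hash(block["index"], block["data"], block["prev"]):
            return False
        prev_hash = block["hash"]
    return True


ledger: list[dict] = []
append_block(ledger, "The sky is green")  # false, but accepted without complaint
append_block(ledger, "Alice pays Bob 5")

intact_before = is_untampered(ledger)     # the false claim passes the integrity check

ledger[0]["data"] = "The sky is blue"     # a later edit, even a "correction", breaks the chain
intact_after = is_untampered(ledger)
```

In this sketch `intact_before` is true and `intact_after` is false: the ledger vouches for the green sky and flags the attempt to fix it.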

Enter context engineering

With blockchains, this is where oracles come in. Oracles are external data sources that feed real-world information, or context, into blockchains. (In the non-blockchain world, this functionality is more accurately and concisely called APIs.) They're the mechanism by which blockchains learn about stock prices, weather conditions, sports outcomes, or any other external events that smart contracts might need to respond to.

Combining this “IRL” data with the blockchain’s (near-) immutability is what empowers “smart contracts” (which, as I’ve covered previously, are neither smart nor contracts) to adjudicate transactions based on events exogenous to the blockchain itself.
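Here is a rough sketch of that arrangement in Python rather than in an actual smart contract language. Everything in it is hypothetical (the `settle_bet` function, the two oracle functions, the match ID); it only illustrates that the contract logic is deterministic and that the oracle is its sole window onto the outside world, so the payout is only as good as the feed.

```python
from typing import Callable

# An "oracle" here is just a function that reports an external fact.
Oracle = Callable[[str], str]


def honest_oracle(match_id: str) -> str:
    """Hypothetical feed that reports the real result of a match."""
    results = {"match-1": "home"}
    return results[match_id]


def compromised_oracle(match_id: str) -> str:
    """Hypothetical feed that has been tampered with and always reports 'away'."""
    return "away"


def settle_bet(match_id: str, stakes: dict[str, tuple[str, int]], oracle: Oracle) -> dict[str, int]:
    """Split the pot evenly among whoever backed the outcome the oracle reports.

    stakes maps a bettor's name to (predicted outcome, amount staked).
    If nobody picked the reported outcome, everyone is refunded.
    """
    outcome = oracle(match_id)
    pot = sum(amount for _, amount in stakes.values())
    winners = [name for name, (pick, _) in stakes.items() if pick == outcome]
    if not winners:
        return {name: amount for name, (_, amount) in stakes.items()}
    share = pot // len(winners)
    return {name: (share if name in winners else 0) for name in stakes}


stakes = {"alice": ("home", 10), "bob": ("away", 10)}
honest_payout = settle_bet("match-1", stakes, honest_oracle)
bad_payout = settle_bet("match-1", stakes, compromised_oracle)
```

With the honest feed, Alice collects the pot. With the tampered feed, the same code pays Bob just as faithfully.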

In the world of LLMs, the foundation model companies (primarily OpenAI, Anthropic, and Google) have solved the context problem through external data integrations. Web search capabilities (these days, this is mostly enabled by default) allow models to access current information from the internet — much like Google Search.

The Model Context Protocol (MCP), introduced by Anthropic in November 2024, standardized the way LLMs connect to both public and private data sources. These capabilities transform LLMs from impressive but isolated reasoning engines into powerfully dynamic systems that can apply their intelligence to real-world, up-to-date information.

The general technique of retrieving updated, relevant information and injecting it into an LLM’s prompt is known as retrieval-augmented generation (RAG). It allows models to draw on databases, documents, or any other information that would otherwise be unavailable to the static model itself.
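A stripped-down sketch of the idea in Python, with loud caveats: the document store is three made-up sentences, retrieval is naive word overlap (real systems typically use a search index or embeddings), and no model is actually called. The hypothetical `build_prompt` function just shows the step where fresh context gets placed in front of the question.

```python
import re

# Hypothetical document store; in practice this might be a search index or vector database.
DOCUMENTS = [
    "Team A won the league title in the most recent season.",
    "The company filed for bankruptcy in March.",
    "Blockchains store an append-only ledger of transactions.",
]


def tokenize(text: str) -> set[str]:
    """Lowercase the text and split it into a set of alphanumeric words."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def retrieve(question: str, documents: list[str], k: int = 1) -> list[str]:
    """Rank documents by word overlap with the question and keep the top k."""
    question_words = tokenize(question)
    ranked = sorted(
        documents,
        key=lambda doc: len(question_words & tokenize(doc)),
        reverse=True,
    )
    return ranked[:k]


def build_prompt(question: str, documents: list[str]) -> str:
    """Prepend the retrieved context to the question before it goes to a model."""
    context = "\n".join(f"- {doc}" for doc in retrieve(question, documents))
    return (
        "Answer using only the context below.\n"
        f"Context:\n{context}\n"
        f"Question: {question}"
    )


prompt = build_prompt("Who won the league title in the most recent season?", DOCUMENTS)
```

The model's weights never change here. The only thing that changes is what lands in the prompt, which is why this works even for events after the training cutoff.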

This cocktail is genuinely transformative. A model that can both reason about complex scenarios and access current data becomes exponentially more useful than either capability alone: it starts to more closely resemble a human colleague.

You can see where I’m going here: like large language models, blockchains are isolated artifacts, detached from the real-world dynamics that surround them. To enrich either one with updated data, methods emerged for bridging the interior world of the model or blockchain with the messy external universe of dynamic, real-time data.

But there are some key differences

There are two major differences, however, that render this superficially apt comparison rather ill-suited to reality.

First, unlike blockchains, LLMs were not ushered into existence under the ideological banner of opposition to trust in authorities. To the contrary, LLMs are, if anything, too credulous: they achieve something resembling reason by vacuuming up terabyte upon terabyte of digitized text. (“Indiscriminately” may be too prejudicial a term, but it’s not that far off.)

In the early days, blockchains were often lauded as a bulwark against the arbitrariness of human-generated policy decisions (most notably monetary policy). LLMs, by contrast, consist entirely² of human-generated content.

This has always been the central contradiction of blockchain oracles, which I wrote about in 2021:

Oracle providers rely on a marketplace of data sources — for example, the Associated Press can be queried for election results via API (there’s composability again!) — to bring external data into the blockchain. That means the data has not been subjected to the same consensus validation methodology central to web3.

In short, it means you have to trust someone. And like anything that involves trusting other people, sometimes that trust turns out to be misplaced. That is, when the oracle itself is compromised, so is your transaction that relies on it.

A smart contract, therefore, isn’t very smart at all. The universe of data types that can be validated trustlessly on the blockchain is infinitesimally small, but to expand that universe requires relying on external institutions like those dirty hippies over at the Associated Press.

Enriching LLM chats with additional real-world context generates no such ideological dissonance. There are no AI purists decrying the practice of making LLMs more useful. (If you think this is a straw man, behold the Bitcoin block size debate.)

Second, and perhaps even more importantly, LLMs are astoundingly useful tools all on their own, with or without additional context. They can reason, analyze, write, code, and solve problems using their trained knowledge. Yes, adding real-time data amplifies their existing capabilities dramatically, but for a long time after ChatGPT’s introduction in November 2022, the predominant use of LLMs was to effectively chat with the cryogenically preserved state of the Internet as of whatever cutoff date existed in the most recent models. And millions of people (myself included) found this extremely useful! Don’t take my word for it: just check out Stack Overflow activity over time.

Blockchains, by contrast, have extraordinarily limited functionality without oracles. They are software sandboxes. The original Bitcoin blockchain contains effectively no data other than which coins were sent from and to which wallet addresses. The Ethereum blockchain (and the many others in the same vein that have spawned since) is more advanced, but ultimately suffers from the same “sky is green” problem.

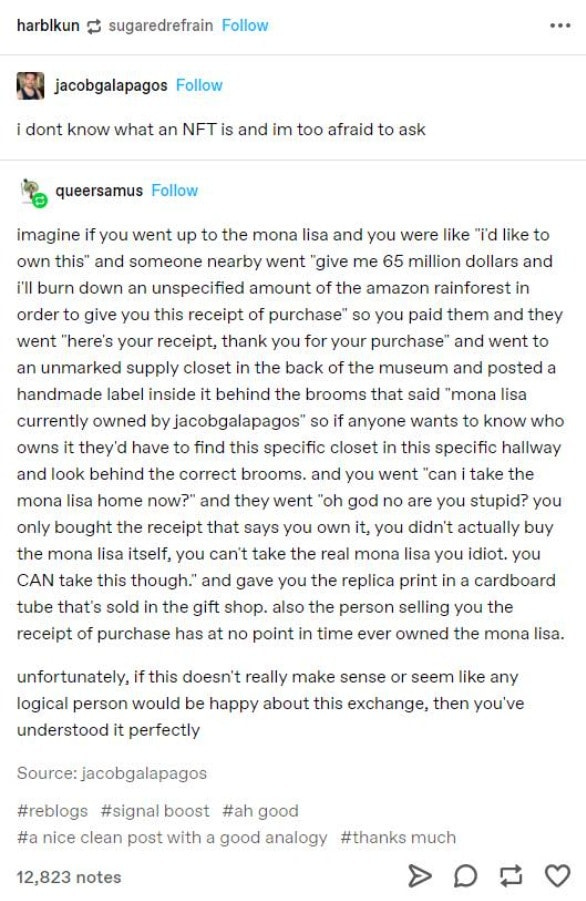

In my 2021 post, I included a widely shared meme about non-fungible tokens (NFTs), which were once heralded by crypto enthusiasts as the future of art. As the meme makes clear, it is very difficult to derive genuine value on the blockchain when it cannot natively validate even the most basic external facts (for example, who actually owns the Mona Lisa):

So do both LLMs and blockchains require additional context to achieve their fullest potential? Yes. But unlike the blockchain, LLMs boast plenty of productive functionality all on their own.

(Note: This post, perhaps unsurprisingly given the topic, was a co-creation between myself and AI — specifically, Anthropic’s Claude.)

¹ Blockchains ≠ crypto ≠ Bitcoin. (I would have extended this to “web3” as well, but nobody knows what that even is.)

² Well, not anymore. The ouroboros is nigh upon us.

Eight years on from 2017, did blockchains ever see a real application outside of cryptocurrencies? The zero-trust model is neat and all but in practice blockchains are so inefficient that trust is usually much cheaper to establish in conventional ways.