A smarter way to measure transcript accuracy

TLDR: I've built a Transcript Accuracy Analyzer app to evaluate the accuracy of automatic speech recognition (ASR) models. (Warning: this gets pretty in the weeds.)

One-Sentence Takeaway: I’ve built a free and open-source Transcript Accuracy Analyzer app, which you can use to evaluate the accuracy of automatic speech recognition providers like Google, AWS, and OpenAI’s Whisper: transcripts.streamlit.app.

Background: the automatic speech recognition landscape

ChatGPT isn’t the only consequential model OpenAI has launched recently.

Just under a year ago, OpenAI released Whisper, an open-source automatic speech recognition (ASR) model. About half a year later, they released an API for it too. I wrote about using it last month.

This was a pretty big deal. Whisper’s accuracy is extremely high, and OpenAI gave away the entire model for free. (Even the API version costs only $0.36 for an hour of transcription.)

On a practical level, most people don’t have GPUs with enough juice to quickly transcribe large files on their own computers. But the fact that it is technically possible to do so for free effectively caps the market price of transcription more broadly.

Or at least, it should. Reality is more of a mixed bag. AWS Transcribe and Microsoft Cognitive Speech Services rates have stayed stubbornly high (around $1 - $1.50 / hour). However, Google Speech-to-Text now has options priced at half of the Whisper API’s ($0.18 / hour) or even less, and Deepgram offers transcription for $0.26 / hour.[1]

All this to say, it’s an exciting time for consumers of fast, affordable automatic speech recognition. But how good are the transcripts themselves?

This is a surprisingly difficult question to answer. The canonical ASR accuracy metric is Word Error Rate (WER): it takes a gold standard transcript (typically one written manually by a human), compares an ASR version to it, tallies up all the insertions, substitutions, and deletions, and then divides that total by the number of words in the gold standard transcript.

This method has some obvious limitations: a single erroneous word may be nearly inconsequential — think “the cat entered the house” vs. “a cat entered the house” — or, conversely, rather catastrophic: “the cat entered the house” vs. “the car entered the house.” Both of these errors would produce the same WER of 20% relative to the correct transcript (1 substitution divided by 5 words), but the second error is clearly far more substantial.
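To make the WER arithmetic concrete, here is a minimal pure-Python sketch that counts word-level insertions, deletions, and substitutions with a standard edit-distance table. It is not the TAA’s code (in practice a library such as jiwer handles this, along with edge cases like an empty reference), and the function name `word_error_rate` is hypothetical.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """Classic WER: (substitutions + deletions + insertions) / reference word count."""
    ref, hyp = reference.split(), hypothesis.split()
    # dist[i][j] = fewest word edits that turn ref[:i] into hyp[:j]
    dist = [[0] * (len(hyp) + 1) for _ in range(len(ref) + 1)]
    for i in range(len(ref) + 1):
        dist[i][0] = i  # delete all i reference words
    for j in range(len(hyp) + 1):
        dist[0][j] = j  # insert all j hypothesis words
    for i in range(1, len(ref) + 1):
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            dist[i][j] = min(
                dist[i - 1][j] + 1,         # deletion
                dist[i][j - 1] + 1,         # insertion
                dist[i - 1][j - 1] + cost,  # substitution (free if words match)
            )
    return dist[len(ref)][len(hyp)] / len(ref)


gold = "the cat entered the house"
print(word_error_rate(gold, "a cat entered the house"))    # 0.2
print(word_error_rate(gold, "the car entered the house"))  # 0.2
```

Both errors score exactly 0.2, which is the blind spot described above: the metric sees one substitution either way.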

This started to bother me as I experimented with more ASR service providers. I had an intuition that some models were clearly producing much higher-quality transcripts than other ones, but when I used Python libraries to calculate the WER, the results were often inconclusive, even when the differences were obvious to the naked eye.

What if, instead of simply counting the word differences between transcripts, I could account for the severity of the errors somehow?

Using text embeddings to measure accuracy

This gave me an idea[2]: use text embeddings, a key feature of large language models (LLMs), to measure the similarity between two differing sections of text. Text embeddings are basically a mathematical representation of semantic meaning: they turn words, phrases, and sentences into extremely high-dimensional[3] numerical arrays in which similar texts are clustered together and dissimilar texts are spaced far apart.

That way, using cosine similarity, I can actually quantify just how much worse the mis-transcription “the car entered the house” is relative to “a cat entered the house.”
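Cosine similarity is the dot product of two vectors divided by the product of their lengths. The sketch below uses made-up 3-dimensional vectors in place of real embeddings, which would come from an embedding model such as OpenAI’s; `cosine_similarity` is an illustrative helper, not part of any particular library. “Cosine dissimilarity” here simply means 1 minus this value.

```python
import math
from typing import Sequence


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two vectors: 1 = same direction, -1 = opposite."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Toy 3-dimensional vectors standing in for real embeddings.
print(cosine_similarity([1.0, 0.0, 0.0], [1.0, 0.0, 0.0]))  # 1.0
print(cosine_similarity([1.0, 0.0, 0.0], [0.0, 1.0, 0.0]))  # 0.0
```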

But first, the transcripts have to be normalized. There are numerous possible ways to go about this, but all methodologies flow from the same general concept, which is that an evaluation of ASR accuracy should focus mostly or entirely on word accuracy, not capitalization, punctuation, spacing, numerical formatting (e.g. “100” vs. “one hundred”), and so on. The normalization process standardizes both transcripts to a specific format — for example, all words may be lower-cased and all numbers converted to either digit or word format, and so on — so they can be compared on word accuracy alone.
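As an illustration of the idea (not the TAA’s or Whisper’s actual normalizer), here is a deliberately simple normalizer that lower-cases text, turns punctuation into spaces, and collapses whitespace. It keeps apostrophes inside words so that “we’re” doesn’t turn into “were,” and it makes no attempt at number formatting; the name `normalize` is hypothetical.

```python
import re


def normalize(text: str) -> str:
    """Lower-case, drop punctuation (keeping in-word apostrophes), collapse spaces.

    Deliberately simple: it does NOT convert numbers ("100" vs. "one hundred"),
    dates, ordinals, or British/American spellings.
    """
    text = text.lower()
    text = text.replace("\u2019", "'")             # curly apostrophe -> straight
    text = re.sub(r"[^\w\s']", " ", text)          # other punctuation -> space
    text = re.sub(r"(?<!\w)'|'(?!\w)", " ", text)  # drop quotes not inside a word
    return " ".join(text.split())                 # collapse runs of whitespace


print(normalize("We’re home, Mr. Smith!"))  # we're home mr smith
```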

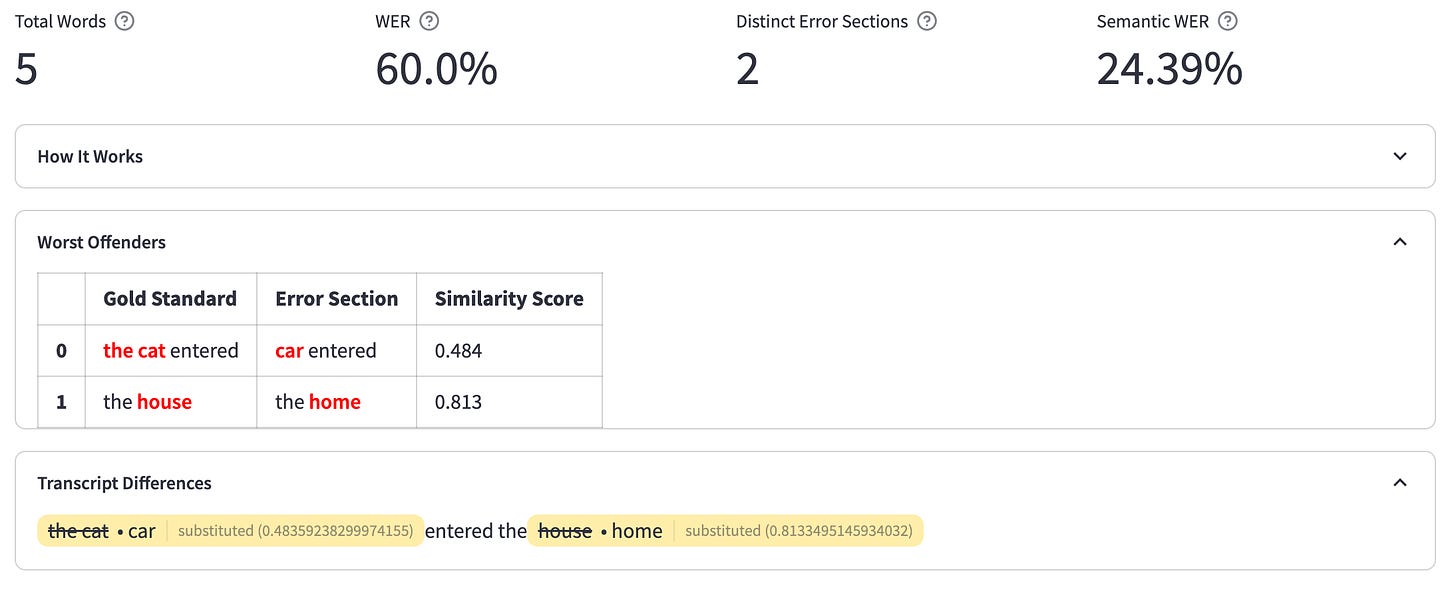

After normalization, I compare the ASR transcript to the gold standard one and combine adjacent errors into “error sections.” For example, if the gold standard transcript says “the cat entered the house” but the ASR one says “car entered the home,” the Transcript Accuracy Analyzer (TAA) app combines the first two word errors (deletion of “the” and substitution of “cat” with “car”) into one section because they’re directly adjacent to each other, but treats the later substitution of “house” with “home” as a separate error section.
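One way to build these error sections, assuming Python’s standard-library `difflib` rather than whatever alignment the TAA actually uses, is to align the two word lists and keep every non-matching stretch. `SequenceMatcher.get_opcodes()` reports each run of edits between two matching stretches as a single opcode, so adjacent errors come out merged. Note that `difflib` finds a readable alignment, not necessarily the minimum-edit one; `error_sections` is a hypothetical helper.

```python
from difflib import SequenceMatcher


def error_sections(gold: str, asr: str) -> list[tuple[str, str]]:
    """Return (gold_text, asr_text) pairs, one per run of adjacent word errors."""
    gold_words, asr_words = gold.split(), asr.split()
    # autojunk=False stops difflib from treating very frequent words as junk
    # in long word lists (its heuristic kicks in at 200+ items).
    matcher = SequenceMatcher(None, gold_words, asr_words, autojunk=False)
    sections = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            continue
        # "replace", "delete", and "insert" opcodes each cover every edit
        # between two matching stretches, so adjacent errors arrive merged.
        sections.append((" ".join(gold_words[i1:i2]), " ".join(asr_words[j1:j2])))
    return sections


print(error_sections("the cat entered the house", "car entered the home"))
# [('the cat', 'car'), ('house', 'home')]
```

A pure deletion shows up with an empty ASR side, e.g. `('the', '')`, which is why some sections need surrounding context before they can be embedded.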

This simplifies the analysis by slimming down transcripts to two types of text sections: ones in which the text is identical between the two transcripts and ones where the text differs (error sections).

Then, for each error section, I embed the differing texts (plus, for some error sections, neighboring text on either side[4]), calculate their adjusted similarities[5] to determine just how different their meanings are, and list the top 20 “worst offenders.”
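Picking the worst offenders is then a sort on the adjusted similarity score. The sketch assumes each section already has a similarity between 0 and 1; `ScoredSection` and `worst_offenders` are hypothetical names, and the scores in the example are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class ScoredSection:
    gold_text: str
    asr_text: str
    similarity: float  # adjusted similarity: 1.0 = same meaning, 0.0 = unrelated


def worst_offenders(sections: list[ScoredSection], n: int = 20) -> list[ScoredSection]:
    """Return the n error sections whose meaning drifted the most (lowest first)."""
    return sorted(sections, key=lambda s: s.similarity)[:n]


# Similarity values below are made up for illustration, not TAA output.
scored = [
    ScoredSection("the", "a", 0.9),
    ScoredSection("cat", "car", 0.2),
    ScoredSection("house", "home", 0.6),
]
for s in worst_offenders(scored, n=2):
    print(s.gold_text, "->", s.asr_text, s.similarity)
```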

Introducing Semantic WER

This is where things get interesting: how should these similarity scores be incorporated into an overall accuracy metric?

I looked into several possibilities. One metric that’s long existed, and was designed as a sort of more sophisticated version of WER, is Word Information Lost (WIL). WIL is calculated as:

$$
\mathrm{WIL} = 1 - \frac{H}{N} \cdot \frac{H}{P} = 1 - \frac{H^2}{(H + S + D)(H + S + I)}
$$

…where H = the number of correct words present in both transcripts, N is the total number of words in the gold standard transcript, and P is the total number of words in the ASR transcript. (The rightmost section of the above equation is the same thing presented slightly differently, in which S = substitutions, D = deletions, and I = insertions.)
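Computing WIL from those definitions shows why it doesn’t solve the severity problem on its own: it still depends only on the counts H, S, D, and I, so “a cat entered the house” and “the car entered the house” (4 hits and 1 substitution each) get identical scores. A minimal sketch, with hypothetical function names:

```python
def word_information_lost(h: int, s: int, d: int, i: int) -> float:
    """WIL = 1 - (H/N)(H/P), where N = H + S + D and P = H + S + I."""
    n = h + s + d  # words in the gold standard transcript
    p = h + s + i  # words in the ASR transcript
    return 1 - (h / n) * (h / p)


def word_error_rate_from_counts(h: int, s: int, d: int, i: int) -> float:
    """Classic WER from the same counts: (S + D + I) / N."""
    return (s + d + i) / (h + s + d)


# "the cat entered the house" vs. "the car entered the house": 4 hits, 1 substitution
print(word_error_rate_from_counts(4, 1, 0, 0))       # 0.2
print(round(word_information_lost(4, 1, 0, 0), 4))   # 0.36
```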

Was there a way to modify WIL to incorporate semantic similarity? I came up with a number of alternative versions, but ultimately none were particularly satisfying or (crucially) easily explainable.

Instead, I decided to go with the simpler and more accessible option of modifying WER by weighting each error section by the cosine dissimilarity between its gold standard text and its ASR text.[6] For example, going back to “the cat entered the house” (gold standard) versus “a cat entered the house” (ASR 1) and “the car entered the house” (ASR 2): both ASR versions still have a 20% WER, but now (using TAA’s default settings) ASR 1 has a Semantic WER of 2.87%, while ASR 2’s is more than double that, at 6.05%. This is closer to what one would expect.
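Here is one reading of that weighting as a sketch, under the assumption (not confirmed by the post) that each error section contributes its number of word errors times (1 − adjusted similarity), and the total is divided by the gold standard word count, just like classic WER. `semantic_wer` is a hypothetical name, and the similarity values are placeholders rather than real embedding scores, so they won’t reproduce the 2.87% and 6.05% figures.

```python
def semantic_wer(sections: list[tuple[int, float]], gold_word_count: int) -> float:
    """Sum of each section's word errors weighted by its dissimilarity, divided by N.

    sections: (word_errors, adjusted_similarity) pairs. adjusted_similarity is
    assumed to lie in [0, 1], so (1 - similarity) is the cosine dissimilarity.
    """
    weighted = sum(errors * (1.0 - similarity) for errors, similarity in sections)
    return weighted / gold_word_count


# One substitution in a 5-word gold transcript (classic WER = 20%).
# The similarity values are illustrative placeholders.
print(semantic_wer([(1, 0.9)], 5))  # near-synonym: well under 20%
print(semantic_wer([(1, 0.3)], 5))  # meaning-changing error: closer to 20%
```

Because every weight is at most 1, this version can never exceed classic WER.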

One obvious question might be: why go to all this trouble? Even if classic WER isn’t a great metric, isn’t it good enough? As long as the ASR transcript isn’t truly atrocious, anyone reading it should be able to grasp the overall meaning in most cases, right?

Well, maybe. But one big thing that’s changing now is that the consumers of transcribed audio are not necessarily human beings anymore. Transcripts themselves are in many cases simply an invisible intermediary step between the raw audio and a final summary produced by a large language model. (For example: my Podcast Summarizer app.)

So even if humans can manage to figure out the underlying concepts from a badly transcribed podcast episode, there’s no guarantee an LLM can. In that case, an embedding model from OpenAI, the company behind the most well-known LLM (ChatGPT), is a natural tool for capturing the semantic meaning lost between two transcripts. Choosing an ASR provider with a low Semantic WER could thus mean the difference between a high-quality summary and a nonsensical one.

The Transcript Accuracy Analyzer app is free to use and hosted on Streamlit, and the code is open-source. Feel free to fork it, file PRs, and create GitHub issues. Also, let me know if you see any bugs, errors, or problems.

How ASR providers stack up on the Transcript Accuracy Analyzer

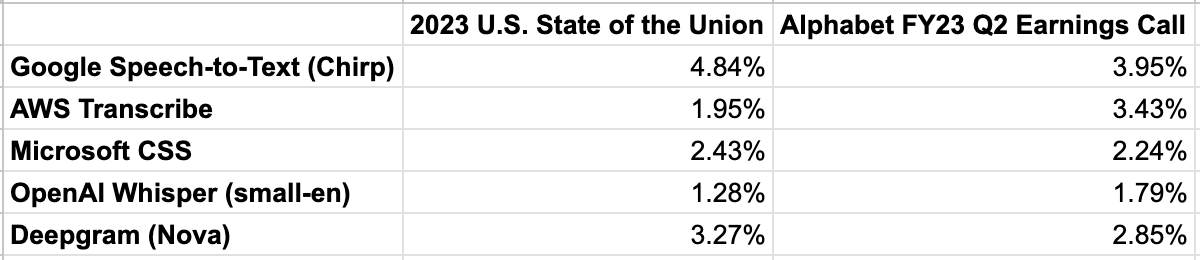

Based on an extremely small sample of transcripts (n=2, heh), the TAA results suggest that the major cloud providers are not the state of the art when it comes to transcript accuracy.

Take the 2023 U.S. State of the Union, for example. I used The New York Times’ transcript as the gold standard (not the official White House version, as it excluded Joe Biden’s improvised lines) and then requested transcripts from major ASR providers using the audio from the NBC News broadcast.

I did a similar thing with Alphabet’s recent Q2 earnings call.

Here’s how they stacked up on Semantic WER:

It’s interesting that Google performs the worst of the providers I tested (even on its own corporate parent’s earnings call!), especially since it just announced its state-of-the-art speech-to-text transcription model, called Chirp, less than three months ago, and placed it in general availability starting…tomorrow. 👀

Perhaps unsurprisingly, Whisper comes in on top for both transcripts. This comports with my anecdotal sense that Whisper generally performs best of all the models I’ve used.

Next steps

Other areas of potential future research I’m exploring include:

Switching to a more accurate embedding model. I’m currently using OpenAI’s “text-embedding-ada-002” model, but at the time of writing it is only the 13th-most-accurate embedding model, per Hugging Face’s Massive Text Embedding Benchmark (MTEB) Leaderboard.

Experimenting with different normalization techniques (especially for numbers, dates, ordinals, British-vs.-American English, etc.). This matters not only for calculating WER but also because embeddings depend heavily on the exact tokens in the text. As one example, a normalization that removes apostrophes would transform “we’re” into “were,” which would have a very different embedding because it means something entirely different. OpenAI discusses its normalization methodology in Appendix C of its Whisper paper and also open-sources the normalization code on GitHub. Google has published some guidelines as well.

Building a far more systematic accuracy analysis using a large dataset of audio files and gold standard transcripts, such as LibriSpeech. As a widely accepted corpus, LibriSpeech should avoid many of the problems common to gold standard transcripts culled from open-web sources (like the sample ones in my TAA app): they may not be fully accurate (e.g. they may remove filler words or insert editorial content like “(applause)” or “[inaudible]”) or they may in some cases be AI-generated or -assisted themselves.

Trying out the full array of settings available on the various ASR services, such as ignoring filler words or using different number formatting.

Notes

[1] There’s a bit of nuance to both the pricing and the features here. Google has a bunch of different pricing options depending on whether you use V1 or V2 of its API, whether you opt in to sending data back to them to help improve the service, and even how long you’re willing to wait for the transcription: if you’re willing to wait up to 24 hours and send data back to Google, you can transcribe an hour of audio for as little as 13.5¢.

In parallel, some of these services offer different feature sets: for example, Google’s latest-and-greatest ASR model is called Chirp, but it doesn’t currently support diarization (differentiating between different speakers) or language detection.

[2] Turns out I wasn’t the first one to this idea. A pair of Facebook / Meta AI papers recently explored the potential of using sentence-level embeddings to evaluate ASR performance. But even earlier, a group of scholars from Université Grenoble Alpes proposed a similar concept, which I’ve partially drawn inspiration from in designing my Semantic WER metric. (There are several differences, one of which is that I calculate semantic similarity on insertions, deletions, and substitutions, whereas the UGA folks only calculated it on substitutions.)

[3] OpenAI’s “text-embedding-ada-002” model, which TAA uses, outputs 1,536-dimensional vectors.

[4] Error sections contain blank text in one of the transcripts any time the change is an insertion or a deletion (i.e. not a substitution). For example, if one transcript states “She walked to the office” and the second transcript states “She walked to office,” the error section for the second transcript would be blank, as the word “the” is not present. Because an empty text string cannot be vector-embedded or compared to the embedding of another string, it is necessary to include surrounding words in that case so that the context of the differing sections is accounted for when determining semantic similarity. The minimum word threshold can be adjusted in TAA’s advanced settings.
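A minimal sketch of that padding, assuming the section is given as word-index ranges into each transcript (for example, the `i1, i2, j1, j2` values from `difflib`’s `get_opcodes()`): widen both sides one word at a time until each side has at least `min_words` words or the transcripts run out. The function name and the default of 3 are hypothetical stand-ins for TAA’s adjustable threshold, and this simple version assumes the neighboring words match in both transcripts.

```python
def pad_section(
    gold_words: list[str], asr_words: list[str],
    i1: int, i2: int, j1: int, j2: int, min_words: int = 3,
) -> tuple[str, str]:
    """Widen gold_words[i1:i2] / asr_words[j1:j2] with shared neighboring words."""
    while i2 - i1 < min_words or j2 - j1 < min_words:
        grew = False
        if i1 > 0 and j1 > 0:  # borrow one word of context on the left
            i1, j1 = i1 - 1, j1 - 1
            grew = True
        if i2 < len(gold_words) and j2 < len(asr_words):  # and one on the right
            i2, j2 = i2 + 1, j2 + 1
            grew = True
        if not grew:  # both transcripts exhausted; return what we have
            break
    return " ".join(gold_words[i1:i2]), " ".join(asr_words[j1:j2])


gold = "she walked to the office".split()
asr = "she walked to office".split()
# The deleted "the" is gold_words[3:4]; the ASR side is the empty range [3:3].
print(pad_section(gold, asr, 3, 4, 3, 3))  # ('walked to the office', 'walked to office')
```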

[5] For reasons that appear to be technically complex, even though cosine similarity can in principle range from -1 to 1, in practice most word, phrase, and sentence pairs embedded by OpenAI’s “text-embedding-ada-002” model have a cosine similarity between 0.7 and 1.0. To make Semantic WER more usable, the default TAA settings treat 0.7 to 1.0 as the entire range of possible semantic similarity. (Check out the “How this works” section on the site for more details.)
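Assuming the rescaling is linear (the note only says 0.7 to 1.0 is treated as the whole range), it could look like the sketch below; clamping anything below 0.7 to zero is also an assumption, and `adjusted_similarity` is a hypothetical name.

```python
def adjusted_similarity(raw: float, floor: float = 0.7) -> float:
    """Linearly map raw cosine similarity from [floor, 1.0] onto [0.0, 1.0].

    Values at or below `floor` are clamped to 0.0 (treated as completely
    dissimilar); this clamping is an assumption, not documented TAA behavior.
    """
    scaled = (raw - floor) / (1.0 - floor)
    return min(1.0, max(0.0, scaled))


print(adjusted_similarity(1.0))   # 1.0
print(adjusted_similarity(0.65))  # 0.0
```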

[6] Because this dissimilarity weight never exceeds 1, classic WER is the ceiling for Semantic WER: no ASR transcript can ever have a Semantic WER higher than its classic WER. A potential future version of Semantic WER may allow for negative similarity scores, enabling the possibility of a Semantic WER higher than its classic WER counterpart.